Expeditious NEPA Compliance by the Shortest Applicable Process: How to Do It?

The American Recovery and Reinvestment Act of 2009 (ARRA) requires that adequate resources be devoted to ensuring that applicable environmental reviews are completed on projects funded under the act, that such reviews be completed “on an expeditious basis,” and that “the shortest applicable process allowed under the National Environmental Policy Act be utilized.” What does this mean for a local government, federal agency, or private firm involved in ARRA work?

NEPA and Its Kin

The National Environmental Policy Act (NEPA), enacted in 1969, establishes general policy favoring taking care of the environment, and specifically requires that federal agencies consider the effects of work they propose to fund on “the quality of the human environment.” A number of other laws have similar requirements – notably the Endangered Species Act (ESA) and Section 106 of the National Historic Preservation Act (NHPA). All these laws apply to ARRA-funded projects.

The Purpose of Review

The (often forgotten) purpose of review under NEPA and its kin is to see what the environmental impacts will be if the project being reviewed is carried out, and if such impacts are severe, to seek reasonable ways to avoid or reduce them.

Adequate Resources

“Adequate resources” to complete reviews under NEPA generally means enough money and time to carry out studies of potential effects, to consult with the interested public and with experts as needed to define the effects and explore ways to reduce or avoid them, and to implement whatever impact avoidance or reduction schemes are developed. What this means in any particular case, of course, depends on local circumstances.

Shortest Applicable Process

Each federal agency has its own procedures for doing NEPA review, but under the general regulations issued by the Council on Environmental Quality (CEQ) there are three ways to complete review. Which one is appropriate depends on how severe the impacts of the proposed project are likely to be.

Categorical exclusion screening. Many project types are “categorically excluded” from review under NEPA – meaning that in theory, their likely impacts are so minor that there is no need to review them at all. However, because theory is not always reflected by reality, the regulations require that projects thought to fit into excluded categories be screened somehow to determine whether “extraordinary circumstances” exist that require a higher level of review. Exactly how this screening is done – and what happens if extraordinary circumstances are discovered – depends on the NEPA procedures of the relevant federal agency.

Environmental assessment. Where a project is not categorically excluded, but the severity of its impacts isn’t known, the responsible federal agency is required to perform an “environmental assessment” (EA). The EA is supposed to be a “brief but thorough” analysis to determine whether the project’s impacts are likely to be significant. If they aren’t, the agency can issue a “finding of no significant impact” (FONSI) and proceed. If there are likely to be significant impacts, the agency must prepare an environmental impact statement.

Environmental Impact Statement. An environmental impact statement (EIS) is a detailed analysis of a project’s impacts, and of alternatives to avoid or reduce the impacts. NEPA doesn’t prohibit having significant impacts on the environment, but it does require a hard examination of what they are, and real efforts to find ways to avoid or reduce them. The EIS is considered by the agency in deciding whether and how to proceed with the project; once a decision is made, the agency issues a “record of decision” (ROD) and proceeds. The ROD often lays out what is to be done to avoid or reduce impacts.
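For readers who think in flowcharts, the three levels of review described above can be sketched as a small decision routine. This is only an illustration of the general CEQ scheme, not any agency's actual procedure. The function name, its parameters, and the step labels are invented for the sketch, and it assumes that a categorically excluded project with extraordinary circumstances moves up to an EA, which in practice depends on the relevant agency's NEPA procedures.

```python
from typing import List, Optional


def review_path(categorically_excluded: bool,
                extraordinary_circumstances: bool,
                impacts_significant: Optional[bool]) -> List[str]:
    """Return the sequence of NEPA review steps for a project (illustrative only).

    impacts_significant is True or False once an EA has answered the
    question, and None while the severity of impacts is still unknown.
    """
    # Shortest process: the project fits an excluded category and
    # screening turns up no extraordinary circumstances.
    if categorically_excluded and not extraordinary_circumstances:
        return ["categorical exclusion screening"]

    steps = []
    if categorically_excluded:
        # Screening happened, but extraordinary circumstances were found.
        # ASSUMPTION: the project then moves up to an EA; real agency
        # procedures differ on what happens next.
        steps.append("categorical exclusion screening")

    steps.append("environmental assessment (EA)")
    if impacts_significant is None:
        return steps  # the EA still has to determine significance

    if impacts_significant:
        steps += ["environmental impact statement (EIS)",
                  "record of decision (ROD)"]
    else:
        steps.append("finding of no significant impact (FONSI)")
    return steps


if __name__ == "__main__":
    # A project that is not categorically excluded and whose EA finds
    # no significant impacts.
    print(review_path(False, False, False))
```

Note how the list only gets longer as impacts turn out to be more serious. That is the practical reason for not trying to guess your way into the shortest process.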

Complying with NEPA (and its kin) on an ARRA Project

Generally speaking, the shortest NEPA process is screening a categorically excluded project, so – again generally speaking – it’s best to allocate ARRA funds to projects that fall into categories excluded from NEPA review under the relevant agency’s NEPA procedures. However, there are pitfalls in this approach, because depending on the project, there may be “extraordinary circumstances” that cause considerable delay.

The EA and FONSI generally comprise the next shortest NEPA process, but here again if there are significant impacts, they can lead to significant delays. The EIS-ROD is usually the most complicated, longest form of NEPA review.

The worst delays of all result from trying to sweep impacts under the rug, or from conducting a “quickie” analysis that doesn’t look fully and comprehensively at the project’s potential impacts and seek ways to resolve them – unless, of course, you’re successful in doing so.

As a general rule, it is wisest to assume that impacts will occur, and allocate the resources necessary to identify and resolve them, as an orderly part of your project. Remember, NEPA and most of its kin don't prohibit having environmental impacts; they simply require that they be acknowledged, revealed to and discussed with the public, and resolved somehow if there's a way to do so.

Don’t Forget Those Kin

In carrying out review under NEPA, don’t forget all the related laws – ESA, Section 106 of NHPA, the Clean Air Act, the Clean Water Act, a host of others. Review under these laws is just as mandatory as is review under NEPA, and one does not substitute for another. In other words, the fact that you’ve complied with NEPA doesn’t mean you’ve complied with NHPA or ESA or any of the others – unless you have, following the regulations relevant to each of them.

What If Your Project is “Shovel-Ready?”

There’s been a lot of talk in the run-up to ARRA about funding only projects that are “shovel-ready.” Presumably this means projects on which, among other things, NEPA review has been completed. If review under NEPA and its kin has already been completed on your project, that’s definitely a good thing, but be careful. It’s not always easy to know whether review really has been completed. Sometimes NEPA has apparently been complied with but Section 106 of NHPA or some other review requirement has not. Sometimes the law was “complied with” years ago, and since then laws or circumstances have changed, creating the need for further review. For example, no one was giving much consideration ten years ago to a project’s potential impacts on climate change, but now it’s a big issue. Similarly, it’s only been in the last decade or so that NEPA analysts have begun systematically to examine “environmental justice” issues – will this project have disproportionate adverse impacts on the environments of low income or minority people? “Cumulative effects” – how this project’s effects will contribute to the general pattern of change in a resource or an area – is another issue that has begun to get much more attention in recent years than in the past; it’s been the basis for a good deal of project-stopping litigation. So a project that was “shovel-ready” a decade ago may not be so ready today; older impact analyses may need some tuning-up.

Who Can Help (or Hurt) You?

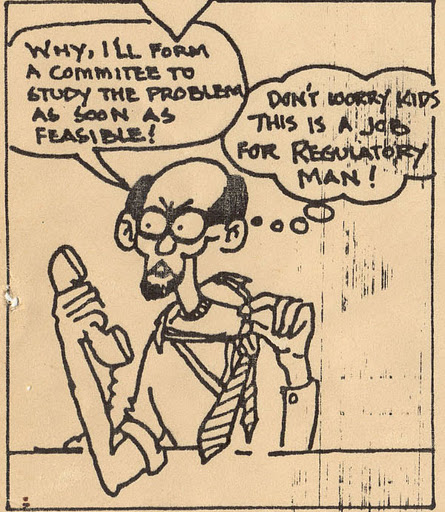

There are innumerable consulting firms eager to help you complete NEPA review on your projects, but be careful. The environmental impact assessment (EIA) industry is not very well regulated or overseen by government or anyone else. As a result, there are a lot of not-particularly competent, and not-especially ethical, companies out there. Different companies can also mean different things when they say they do “environmental” assessments. Some companies are essentially engineering firms that focus on solving problems of air and water pollution; others pay close attention to impacts on natural resources but don’t deal very well with, say, impacts on the urban environment or historic buildings, while others know how to deal with the built environment but aren’t very good with plants and animals. You need to do some research to find the right kind of help. Checking with state and local regulators is a good way to start, but there is no simple, universally applicable way to make sure you get the right kind of assistance. A lot depends simply on thinking about what you propose to do and what it’s going to do to the world. Will it dig up a lot of ground? Knock down or modify a building or neighborhood? Remove a lot of trees? Pump stuff into the air or water? Displace people? Then design a scope of work that focuses on assessing the impacts of such changes, and look for firms that can demonstrate the ability to assess them.

The Prime Directive: Don’t Wait!

The one certain thing about review under NEPA and its kin is that the sooner you get started on it, the faster and more reliably you’re likely to get it done. Conversely, if you wait – if you treat the reviews as something you can check off your list late in the planning process, you’re likely to get into trouble that will delay or even kill your project. So if you’re considering an ARRA-funded project, and you’re not sure that NEPA and the other environmental review laws have already been complied with, then get to work NOW figuring out what kinds of reviews are necessary and getting them underway.

Addendum: Per Active's suggestion below, here's a link to the energy and environment forum, where ARRA and its environmental implications will be further discussed: www.energyenvironmentforum.com

Sunday, February 15, 2009

Wednesday, February 11, 2009

Our Unprotected Heritage in a Bookstore Near You

Left Coast Press (www.lcoastpress.com) has just published my latest book – Our Unprotected Heritage: Whitewashing Destruction of Our Cultural and Natural Environment. Probably destined to make me thoroughly unemployable in the environmental impact assessment (EIA) and cultural resource management (CRM) industries, it’s an exposé on how those industries have become corrupted since their inceptions back in the 1970s. A familiar topic to readers of this blog, I realize, but Unprotected makes, I hope, a rather more fully developed case, and concludes with concrete recommendations to President Obama. Who hasn’t said whether he’ll review it, but who knows?

Sunday, February 08, 2009

Gimme Gimme Gimme: the ACHP's January 16 Recommendations

I’m grateful to Al Tonetti of the ASC Group in Columbus, Ohio for alerting me to the Advisory Council on Historic Preservation’s (ACHP) new report (issued January 16, 2009, entitled “Recommendations to Improve the Structure of the Federal Historic Preservation Program” and accessible at http://www.achp.gov/news090201.html). Although the ACHP issued a press release on the report, it has not made much of it – possibly because it has the good sense to be embarrassed by it.

The report is not worth spending much time to review, but let’s just run through its recommendations. Most are the kind of thing you expect from any federal agency given (by itself in this case) the opportunity to call for an improvement in its own status and that of its political allies. Coming out at the time it did, though – four days before the inauguration of a president whose election repudiated the then-incumbent who appointed the ACHP’s members, and in the midst of the worst international economic disaster in memory – the report is remarkable for its naïve self-promotion.

Recommendation 1: Build up the power of the ACHP. The ACHP recommends that it be headed by a chairperson appointed by the President (as is now the case) with the advice and consent of the Senate (as is not now the case). It also recommends that the chairperson be made a full-time federal employee and that he or she serve on the Domestic Policy Council (DPC). In other words, the ACHP should be recognized as having an influence on national domestic policy equivalent to that exercised by such regular DPC members as the Secretary of Labor, the Secretary of Transportation, the administrator of the Environmental Protection Agency, and the chairperson of the Council of Economic Advisors.

It is not clear why – after frittering away the last eight years patting itself on the back for giving “preserve America” grants to worthy, noncontroversial projects – the ACHP thinks it has anything useful to say about federal domestic policy. To the extent it provides a rationale for its recommendation, the ACHP stresses the importance of the Section 106 review process – precisely the aspect of its authority and responsibility that it has most thoroughly neglected in recent years.

Recommendation 2: Create an Office of Preservation Policy and Procedure (OPPP) in the Office of the Secretary of the Interior. The OPPP would “provide leadership and direction” to Interior agencies in carrying out their responsibilities under the National Historic Preservation Act (NHPA). There are some implications in the report that the OPPP would take over the interagency functions of the National Park Service (NPS) – maintaining the National Register of Historic Places, overseeing project certification for tax benefits, and so on. There would be some benefit in such a reorganization; the “external” programs have never been a happy fit within NPS. But the report doesn’t really go beyond implying such a change. Given NPS's predictable response to any assault on its turf, it is most likely that the OPPP would become simply another level of bureaucracy within the Interior Department.

Recommendation 3: Create an Associate Director for Cultural Resources within the Council on Environmental Quality (CEQ). The rationale for this proposal is to improve the “profile” of historic preservation within the Executive Office of the President. This is doubtless a worthy goal from the standpoint of historic preservation, but it is hardly sufficient. Kite-flyers, all-terrain vehicle drivers, and gardeners would probably like to have their profiles enhanced, too, but this is hardly justification for the assignment of high-level CEQ officials to represent their interests. The ACHP does point out that the National Environmental Policy Act (NEPA) is designed to promote the protection of historic and cultural as well as natural resources, but it makes little or no case for the proposition either that CEQ currently gives such resources short shrift or that adding another official to that body would improve matters.

Recommendation 4: Beef up the Federal Preservation Officers. The ACHP points back to Executive Order 13287, the Bush administration's one legitimate effort to promote federal agency responsibility toward historic preservation, and says its provisions should be implemented. The vehicle it proposes for getting this done is for the ACHP's chairperson and the Office of Management and Budget to make it happen. Since the executive order, the chairperson, and OMB have been around for some years, one wonders why this recommendation has to be made; why has it not already been realized?

Recommendation 5: More money. It wouldn’t be a federal agency recommendation if it didn’t seek more money for agency programs, or for those of its allies. The ACHP report predictably recommends increased funding for the State Historic Preservation Officers (SHPOs) and Tribal Historic Preservation Officers (THPOs). Without getting into whether such increases might be justified (I wouldn’t assume them so without a hard review of how the THPOs and especially the SHPOs are spending the money they already have, under NPS’s generally misguided rules), it is hard to imagine that such increases are very likely under current economic circumstances.

Recommendation 6: More money and technical support to THPOs. The THPOs get a second bite of the ACHP’s proposed funding apple, though it’s not clear how the fiscal part of this recommendation differs from the THPO part of the preceding one. The THPOs certainly do need more money, but they also need relief from pointless NPS regulation and mindless federal agency pretenses at “tribal consultation.” What they probably do not need is “technical support,” if this means – as it usually does – support in meeting NPS administrative and technical requirements.

Recommendation 7: Strengthen Section 106. This one – while not unjustified as far as it goes – is truly mind-boggling, particularly in the way it is phrased: “The Section 106 Function is Lagging, and Must be Strengthened.” Indeed, and why is it lagging? Does the lag have anything to do with the fact that the ACHP’s political and executive leadership has done everything in its power over the last two decades to minimize attention to Section 106? Does it reflect in any way the total lack of creative thought that the ACHP has given to Section 106 review in recent years? Does it have anything to do with the antagonism the ACHP leadership has shown those members of its staff who actually try to participate in review or encourage improved practice on the part of federal agencies and others? Not in the ACHP’s eyes, of course, so what should be done to “strengthen” Section 106? Provide “additional resources to support the ACHP’s crucial role.” Give us more money.

The ACHP goes on to propose that it ought to issue more guidance – which perhaps is true (though one cannot be sanguine about what it might issue), but if it is, why doesn’t the ACHP simply issue some? In a way it does, via this report, proposing better coordination between Section 106 and NEPA and offering some useful if hardly revolutionary suggestions: get 106 review underway before issuing environmental assessments or draft environmental impact statements, finish it before such documents are finalized, and don’t confuse the vacuous “public participation” typically provided for under NEPA with the consultation required under Section 106. It’s too bad the ACHP hasn’t done something over the last ten years to arrest the deterioration of the very consultation it now (by vague implication) extols.

This is a sad, predictable, silly report, which will doubtless get precisely the attention it deserves. I wonder how much of our tax money was spent producing it.

Thursday, February 05, 2009

Quantifying and Enforcing Irrelevance: The Proposed “Performance Measures for Historic Preservation.”

A report just issued by the National Academy of Public Administration (NAPA) – Towards More Meaningful Performance Measures for Historic Preservation – is worth examination by anyone concerned about the effectiveness, utility, and burdensomeness of the national historic preservation program.

Not that it’s a good report. It’s a dreadful report, and its preparation was a classic waste of taxpayer dollars on bureaucratic navel-gazing. But it’s worth looking at because it neatly encapsulates (and seeks to perpetuate) much of what’s made the national program as marginally relevant and maximally inefficient as it is. And because it places the program’s inanities in a larger public administration context, which helps account for them.

The idea of establishing and applying hard and fast quantitative performance measures has been popular in public administration for some time. Conceived in commercial and industrial contexts where they make a certain amount of sense – how many widgets you produce or sell is clearly a relevant measure of a factory worker’s or salesperson’s performance – such measures began to be applied with a vengeance to the performance of federal employees during the time I was in government back in the 1980s. Of course, they didn’t work, because most federal agencies are designed to do things other than produce widgets. So they kept being reworked, rethought, but seldom if ever made more useful. We senior management types found ourselves required almost annually to reformulate the standards we applied in judging how our staffs were doing; it became something of a joke, but was taken with great seriousness by the Office of Management and Budget, and therefore by all the agencies. Soon the plague spread to the measurement of performance by government grant holders such as State Historic Preservation Officers (SHPOs), and beyond government and business to many other sectors of society. Hence programs like “No Child Left Behind,” transforming our schools into factories for the production of test-takers.

There’s no question that government, like business (and school districts), needs to find ways of judging performance, both by programs and by employees. But the application of simplistic quantitative measures is often a waste of time, because what government does (like what a truly good school does) is not easily reduced to countable widgets. Moreover, a focus on quantitative measures can and does distract management from major non-quantitative issues that may confront and beset an agency. It is also a fact – most succinctly formulated in quantum physics, but very evident in public administration – that the act of measuring the performance of a variable affects the way that variable performs. This principle seems to be routinely ignored, or given very short shrift, by performance measure mavens; its doleful effects are very apparent in the way the national historic preservation program works (or fails to).

OK, let’s get specific. First, the NAPA report gives us nothing new; it largely regurgitates standards that have been used for decades by the National Park Service (NPS) in judging SHPOs, and by some land management agencies (and perhaps others) in purporting to measure their own performance. The report was not in fact prepared by NAPA; NAPA simply staffed a committee made up of the usual suspects – three NPS employees, two staffers from the Advisory Council on Historic Preservation (ACHP), three SHPOs, the Federal Highway Administration’s federal preservation officer, one local government representative, three tribal representatives, and one person from what appears to be a consulting firm. Most of the names are very familiar; they’ve been around in their programs for decades. The tribal representatives seem to have focused, understandably and as usual, on making sure the tribes would be minimally affected by the standards; there are a couple of pages of caveats about how different the tribes are. So in essence, the standards were cooked up by NPS, the ACHP, one federal agency, one local government, and a consultant. No wonder they merely tweak existing measures.

So what are the measures? How should the performance of historic preservation programs be measured? Let’s look at those assigned “highest priority” by the report.

The first performance measure is “number of properties inventoried and evaluated as having actual or potential historic value.” This one has been imposed on SHPOs for a long time, and is one of the reasons SHPOs think they have to review every survey report, and insist on substantial documentation of every property, while their staffs rankle at having to perform a lot of meaningless paperwork. Of course, it also encourages hair-splitting; you don’t want to recognize the significance of an expansive historic landscape if counting all the individual “sites” in it will get you more performance points. The report leaves the reader to imagine how it is thought to reflect the quality of a program’s performance.

The next one is “number of properties given historic designation.” We are not told why designating something improves the thing designated or anything else; it is merely assumed that designation is a Good Thing. This measure – also long applied in one form or another to SHPOs – largely accounts for SHPO insistence that agencies and others nominate things to the National Register, regardless of whether doing so serves any practical purpose.

Next comes – get ready – “number of properties protected.” We are given no definition of “protected,” and again it is assumed without justification that “protection” is a good thing. And apparently protecting a place that nobody really gives a damn about is just as good as protecting one a community, a tribe, or a neighborhood truly treasures – as long as it meets the National Register’s criteria. Of course, none of the parties whose performance might be measured – SHPOs, agencies, tribes – has any realistic way of knowing how many properties are “protected” over a given time period, unless they find a way to impose incredibly burdensome reporting requirements. This probably won’t happen; they’ll make something up.

The next three reflect the predilections of the ACHP, though they’ve been around in SHPO standards for decades: “number of … finding(s) of no adverse effect,” “number of … finding(s) of adverse effect,” and “number of Section 106 programmatic agreements.” For some reason memoranda of agreement are apparently not to be counted. Nor are cases in which effects are avoided altogether, despite the fact that such cases presumably “protect” properties more effectively than any of the case-types that are to be counted. The overall effect of these measures is to promote a rigid, check-box approach to Section 106 review, and to canonize the notion that programmatic agreements are ipso facto good things.

The next one is “private capital leveraged by federal historic preservation tax credits,” which seems relatively sensible to me where such tax credits are involved – though it obviously imposes a record-keeping burden on whoever keeps the records (presumably SHPOs and NPS).

The last two high-priority measures are “number of historic properties for which information is available on the internet” and “number of visitors to historic preservation websites.” The first measure, where it can be applied, would seem to favor entities with lots of historic properties and a predilection for recording them. The second seems almost sensible to me, but I should think it would present measurement problems – just what is a “historic preservation website”? The report “solves” this problem in a simplistic way by referring in detail only to SHPO, THPO, and NPS websites. This, of course, makes the measure wholly irrelevant to land management agencies, local governments, and others who maintain such sites.

There are other measures assigned lower priority by the report, but they’re pretty much of a piece with those just described.

It’s easy to see that these measures are pointless, and that it was a waste of time to produce them. But they’re worse than useless because, to the extent they’re actually applied, they require program participants to spend their time counting widgets rather than actually performing and improving program functions. And as noted, some of them encourage nit-picking, hair-splitting, over-documentation, and inflexibility. They create systems in which participants spend their time – and insist that others spend their time – doing things that relate to the measures, as opposed to things that accomplish the purposes of the National Historic Preservation Act. Or any other public purpose.

“OK,” I hear the authors of the report fulminating – in the unlikely event they trouble themselves to read this – “so enough of your kvetching, King, where’s your alternative?” Indeed – given that there is a need to promote good performance in historic preservation programs, how can we “measure” it?

I put “measure” in quotes because I don’t think quantitative measures work – unless you truly are producing or disposing of widgets, which is mostly not what government does. But whether we can “measure” performance or not, I think we can judge performance.

Taking SHPOs as an example, suppose the NPS grant program procedures for SHPOs required each SHPO to have an annual public stakeholder conference in which all those who had had dealings with the SHPO over the preceding year got together with the SHPO (or in some contexts perhaps without him or her) and performed a critique. Make sure the public is aware of the conference and able to participate, so people who may have wanted to do something with the SHPO but been stiffed, or felt they’d been, could also take part. Maybe have a knowledgeable outside facilitator and a set of basic questions to explore, program areas to consider. How satisfied are you with the way the SHPO is representing your interests in historic properties, how sensitive the SHPO is to your economic or other needs, how creative the SHPO is in finding solutions to problems? How do you like the way the SHPO is handling Section 106 review, the National Register, tax act certifications, provision of public information, treatment of local governments, tribal concerns? Do a report, and if we must have quantification, assign a score or set of scores – on a scale of one to ten, this SHPO is an eight. File the report with NPS, which can consider it in deciding how much money each SHPO will get next year.

There are doubtless other ways to do it – probably lots of ways, and some may make more sense than what I’ve just suggested. We ought to consider them – or someone should. What we should not do is remain tied to something as thoroughly idiotic as rating the performance of inherently non-quantitative programs based on how many widgets they’ve produced.
